If you’re responsible for a website, then hopefully you’re doing constant testing to find out what works and what doesn’t. If you are, and you’ve run enough split tests, then you’ve no doubt been in this situation.

The Scenario

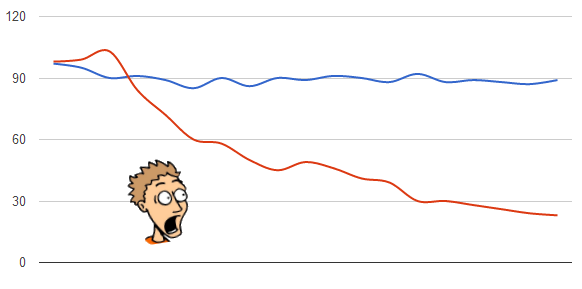

You set up a new test, put it live and wait for the results. You know you’ll have a bit of a wait before you get a statistically significant result, but you keep an eye on the numbers nonetheless. You watch with disappointment as the test seems to show that your tweak is having a negative effect on goal completions. You keep watching and see it perform worse and worse. You can’t afford to lose this many potential customers, so you stop the test.

Obviously potential customers really, really hated your new call to action/button colour/image.

Well, no.

The laws of chance say that this is likely to happen, especially with a relatively small sample size (i.e., before you have enough data for a statistically significant result). The problem is that our brains are hard-wired to see certainty where there is none, to perceive order in randomness.

Intellectually you know this. You know it’s perfectly possible to toss a coin and get five tails in a row before you throw a head. Yet when you think back to the occasions when you’ve experienced the above, you still convince yourself you were right to abandon the test. If you had let the test play out long enough, things would have started to even out.
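To put a number on the coin-toss intuition, here is a minimal sketch that works out the exact chance of seeing at least one streak of tails somewhere in a series of fair tosses. The function name `prob_run_of_tails` and its parameters are made up for this illustration, and it assumes every toss is independent.

```python
def prob_run_of_tails(n_tosses: int, run_length: int = 5, p_tails: float = 0.5) -> float:
    """Chance of at least one streak of `run_length` tails in `n_tosses` tosses."""
    # state[i] = probability that the current streak of tails has length i
    # and no full streak has been completed so far.
    state = [0.0] * run_length
    state[0] = 1.0
    completed = 0.0  # probability that a full streak has already happened

    for _ in range(n_tosses):
        new_state = [0.0] * run_length
        for streak, prob in enumerate(state):
            # A head resets the streak to zero.
            new_state[0] += prob * (1 - p_tails)
            # A tail extends the streak, possibly completing it.
            if streak + 1 == run_length:
                completed += prob * p_tails
            else:
                new_state[streak + 1] += prob * p_tails
        state = new_state

    return completed


if __name__ == "__main__":
    for tosses in (5, 20, 50, 100):
        print(tosses, round(prob_run_of_tails(tosses), 3))
```

With exactly five tosses the chance is 1 in 32, about 3%. Keep tossing and a streak somewhere in the sequence becomes more and more likely, which is why a bad run early in a test is nothing special.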

Still need convincing?

You have actually already experienced the proof. Ever run a test that initially gave a brilliant result? Signups increased 90%? You thought you were a bit awesome, didn’t you? But as the test approached a statistically significant sample size, you saw the huge improvement shrink to a more modest one, or even a negative result. This is exactly the same thing I just described, but instead of throwing five tails in a row, you’re getting heads five times.

That test you abandoned could have given you a positive result once it was completed, but you’ll now never know. Even worse, you’ve corrupted your brain with false information. “Our website visitors don’t like blue buttons” is now one of the things you hold to be true but with no evidence.

Early on in a test the results are just noise. Up until the moment you hit statistical significance, the data tells you absolutely nothing. It’s NOT a preview of the final result. It’s just random noise.
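You can see the noise for yourself with a rough simulation. The sketch below runs a batch of A/A tests, where both variants share the same true conversion rate, so any "winner" is pure chance. It compares how often you would be fooled if you checked a pooled two-proportion z-test at every peek against checking it only once at the end. The 10% conversion rate, 2,000 visitors per variant, a peek every 100 visitors, and all the function names are assumptions chosen for illustration, not figures from a real test.

```python
import random
from statistics import NormalDist


def z_test_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if se == 0:
        return 1.0  # no variation yet, so nothing to conclude
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_test(rng, rate, visitors_per_arm, peek_every, alpha=0.05):
    """Run one A/A test. Returns (fooled_at_any_look, fooled_at_final_look)."""
    conv_a = conv_b = 0
    fooled_on_a_peek = False
    for n in range(1, visitors_per_arm + 1):
        # Both arms convert at the same true rate.
        conv_a += rng.random() < rate
        conv_b += rng.random() < rate
        if n % peek_every == 0 and n < visitors_per_arm:
            if z_test_p_value(conv_a, n, conv_b, n) < alpha:
                fooled_on_a_peek = True
    final = z_test_p_value(conv_a, visitors_per_arm, conv_b, visitors_per_arm) < alpha
    return fooled_on_a_peek or final, final


def false_positive_rates(n_tests=500, rate=0.1, visitors_per_arm=2000,
                         peek_every=100, seed=1):
    """Share of A/A tests that look 'significant' with peeking vs. without."""
    rng = random.Random(seed)
    peeking = fixed = 0
    for _ in range(n_tests):
        any_look, final_look = simulate_aa_test(rng, rate, visitors_per_arm, peek_every)
        peeking += any_look
        fixed += final_look
    return peeking / n_tests, fixed / n_tests


if __name__ == "__main__":
    with_peeking, without_peeking = false_positive_rates()
    print(f"Fooled when peeking:       {with_peeking:.1%}")
    print(f"Fooled when waiting it out: {without_peeking:.1%}")
```

Every look is another chance for random noise to cross the line, so the peeking figure can never come out lower than the wait-it-out figure, and in practice it tends to be a good deal higher.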

The Only Solution

The problem is, you’ll still carry on making this mistake. The urge to perceive order or cause and effect where there is none is incredibly strong. Couple that with our innate need to avoid loss (of users, of revenue, of our jobs) and the logical part of your brain doesn’t stand a chance.

The only way to avoid this mistake is to not even look at the data until your system tells you that you’ve got a big enough sample size.

No peeking!
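If your testing tool doesn’t tell you what "big enough" means, you can estimate it before the test starts. This is a minimal sketch using the standard normal-approximation formula for comparing two conversion rates. The function name `visitors_per_variant`, the 5% significance level and the 80% power are assumptions for illustration, so treat the output as a ballpark rather than your tool’s exact figure.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per variant to detect a given relative lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # the rate you hope the variant reaches

    # z-scores for a two-sided test at `alpha` and for the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    # Sum of the two binomial variances, divided by the squared difference.
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Example: 5% baseline conversion, hoping to detect a 20% relative lift.
    print(visitors_per_variant(0.05, 0.20))
```

For that example the answer comes out at roughly eight thousand visitors per variant. Work out the number first, write it down, and don’t open the results until you’ve reached it.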

Can you admit to making this mistake? Or are you still in denial? Comment below.
